Microsoft Word For Mac 2011 Text To Speech

macOS Catalina introduces Voice Control, a new way to control your Mac entirely with your voice. Voice Control uses the Siri speech-recognition engine to improve on the Enhanced Dictation feature available in earlier versions of macOS.¹

How to turn on Voice Control

After upgrading to macOS Catalina, follow these steps to turn on Voice Control:

- Choose Apple menu > System Preferences, then click Accessibility.

- Click Voice Control in the sidebar.

- Select Enable Voice Control. When you turn on Voice Control for the first time, your Mac completes a one-time download from Apple.²

Voice Control preferences

When Voice Control is enabled, you see an onscreen microphone representing the mic selected in Voice Control preferences.

To pause Voice Control and stop it from listening, say 'Go to sleep' or click Sleep. To resume Voice Control, say or click 'Wake up'.

How to use Voice Control

Get to know Voice Control by reviewing the list of voice commands available to you: say 'Show commands' or 'Show me what I can say'. The list varies based on context, and you may discover variations not listed. To make it easier to know whether Voice Control heard your phrase as a command, you can select 'Play sound when command is recognised' in Voice Control preferences.

Basic navigation

Voice Control recognises the names of many apps, labels, controls and other onscreen items, so you can navigate by combining those names with certain commands. Here are some examples:

- Open Pages: 'Open Pages'. Then create a new document: 'Click New Document'. Then choose one of the letter templates: 'Click Letter. Click Classic Letter'. Then save your document: 'Save document'.

- Start a new message in Mail: 'Click New Message'. Then address it: 'John Appleseed'.

- Turn on Dark Mode: 'Open System Preferences. Click General. Click Dark'. Then quit System Preferences: 'Quit System Preferences' or 'Close window'.

- Restart your Mac: 'Click Apple menu. Click Restart' (or use the number overlay and say 'Click 8').

You can also create your own voice commands.

Number overlays

Use number overlays to quickly interact with parts of the screen that Voice Control recognises as clickable, such as menus, checkboxes and buttons. To turn on number overlays, say 'Show numbers'. Then just say a number to click it.

Number overlays make it easy to interact with complex interfaces, such as web pages. For example, in your web browser you could say 'Search for Apple stores near me'. Then use the number overlay to choose one of the results: 'Show numbers. Click 64'. (If the name of the link is unique, you might also be able to click it without overlays by saying 'Click' and the name of the link.)

Voice Control automatically shows numbers in menus and wherever you need to distinguish between items that have the same name.

Grid overlays

Use grid overlays to interact with parts of the screen that don't have a control, or that Voice Control doesn't recognise as clickable.

Say 'Show grid' to show a numbered grid on your screen, or 'Show window grid' to limit the grid to the active window. Say a grid number to subdivide that area of the grid, and repeat as needed to continue refining your selection.

To click the item behind a grid number, say 'Click' and the number. Or say 'Zoom' and the number to zoom in on that area of the grid, then automatically hide the grid. You can also use grid numbers to drag a selected item from one area of the grid to another: 'Drag 3 to 14'.

To hide grid numbers, say 'Hide numbers'. To hide both numbers and grid, say 'Hide grid'.

Dictation

When the cursor is in a document, email message, text message or other text field, you can dictate continuously. Dictation converts your spoken words into text.

- To enter a punctuation mark, symbol or emoji, just speak its name, such as 'question mark' or 'per cent sign' or 'happy emoji'. These may vary by language or dialect.

- To move around and select text, you can use commands such as 'Move up two sentences' or 'Move forward one paragraph' or 'Select previous word' or 'Select next paragraph'.

- To format text, try 'Bold that' or 'Capitalise that', for example. Say 'numeral' to format your next phrase as a number.

- To delete text, you can choose from many delete commands. For example, say 'delete that' and Voice Control knows to delete what you just typed. Or say 'Delete all' to delete everything and start over.

Voice Control understands contextual cues, so you can seamlessly transition between text dictation and commands. For example, to dictate and then send a birthday greeting in Messages, you could say 'Happy Birthday. Click Send.' Or to replace a phrase, say 'Replace I’m almost there with I just arrived'.

You can also create your own vocabulary for use with dictation.

Create your own voice commands and vocabulary

Create your own voice commands

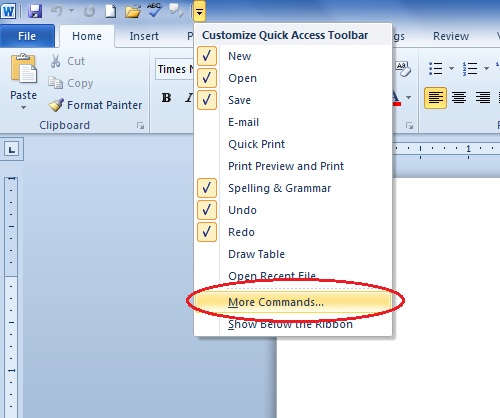

- Open Voice Control preferences, such as by saying 'Open Voice Control preferences'.

- Click Commands or say 'Click Commands'. The complete list of all commands opens.

- To add a new command, click the add button (+) or say 'Click add'. Then configure these options to define the command:

- When I say: Enter the word or phrase that you want to be able to speak to perform the action.

- While using: Choose whether your Mac performs the action only when you're using a particular app.

- Perform: Choose the action to perform. You can open a Finder item, open a URL, paste text, paste data from the clipboard, press a keyboard shortcut, select a menu item or run an Automator workflow.

- Use the checkboxes to turn commands on or off. You can also select a command to find out whether other phrases work with that command. For example, 'Undo that' works with several phrases, including 'Undo this' and 'Scratch that'.

To quickly add a new command, you can say 'Make this speakable'. Voice Control will help you configure the new command based on the context. For example, if you speak this command while a menu item is selected, Voice Control helps you make a command for choosing that menu item.

Create your own dictation vocabulary

- Open Voice Control preferences, such as by saying 'Open Voice Control preferences'.

- Click Vocabulary, or say 'Click Vocabulary'.

- Click the add button (+) or say 'Click add'.

- Type a new word or phrase as you want it to be entered when spoken.

Learn more

- For the best performance when using Voice Control with a Mac notebook computer and an external display, keep your notebook lid open or use an external microphone.

- All audio processing for Voice Control happens on your device, so your personal data is always kept private.

- Use Voice Control on your iPhone or iPod touch.

- Learn more about accessibility features in Apple products.

1. Voice Control uses the Siri speech-recognition engine for U.S. English only. Other languages and dialects use the speech-recognition engine previously available with Enhanced Dictation.

2. If you're on a business or school network that uses a proxy server, Voice Control might not be able to download. Have your network administrator refer to the network ports used by Apple software products.

This article presents the new Speech API and shows how to implement it in a Xamarin.iOS app to support continuous speech recognition and transcribe speech (from live or recorded audio streams) into text.

New to iOS 10, Apple has released the Speech Recognition API, which allows an iOS app to support continuous speech recognition and transcribe speech (from live or recorded audio streams) into text.

According to Apple, the Speech Recognition API has the following features and benefits:

- Highly Accurate

- State of the Art

- Easy to Use

- Fast

- Supports Multiple Languages

- Respects User Privacy

How Speech Recognition Works

Speech Recognition is implemented in an iOS app by acquiring either live or pre-recorded audio (in any of the spoken languages that the API supports) and passing it to a Speech Recognizer which returns a plain-text transcription of the spoken words.

Keyboard Dictation

When most users think of Speech Recognition on an iOS device, they think of the built-in Siri voice assistant, which was released along with Keyboard Dictation in iOS 5 with the iPhone 4S.

Keyboard Dictation is supported by any interface element that supports TextKit (such as UITextField or UITextView) and is activated by the user tapping the Dictation button (directly to the left of the spacebar) on the iOS virtual keyboard.

Apple has released the following Keyboard Dictation statistics (collected since 2011):

- Keyboard Dictation has been widely used since it was released in iOS 5.

- Approximately 65,000 apps use it per day.

- About a third of all iOS Dictation is done in a 3rd party app.

Keyboard Dictation is extremely easy to use as it requires no effort on the developer's part, other than using a TextKit interface element in the app's UI design. Keyboard Dictation also has the advantage of not requiring any special privilege requests from the app before it can be used.

Apps that use the new Speech Recognition APIs will require special permissions to be granted by the user, since speech recognition requires the transmission and temporary storage of data on Apple's servers. Please see our Security and Privacy Enhancements documentation for details.

While Keyboard Dictation is easy to implement, it does come with several limitations and disadvantages:

- It requires the use of a Text Input Field and display of a keyboard.

- It works with live audio input only and the app has no control over the audio recording process.

- It provides no control over the language that is used to interpret the user's speech.

- There is no way for the app to know if the Dictation button is even available to the user.

- The app cannot customize the audio recording process.

- It provides a very shallow set of results that lacks information such as timing and confidence.

Speech Recognition API

New to iOS 10, Apple has released the Speech Recognition API, which provides a more powerful way for an iOS app to implement speech recognition. This API is the same one that Apple uses to power both Siri and Keyboard Dictation, and it is capable of providing fast transcription with state-of-the-art accuracy.

The results provided by the Speech Recognition API are transparently customized to the individual users, without the app having to collect or access any private user data.

The Speech Recognition API provides results back to the calling app in near real-time as the user is speaking, and it provides more information about the recognition results than just text. This includes:

- Multiple interpretations of what the user said.

- Confidence levels for the individual transcriptions.

- Timing information.
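The extra details listed above can be read from the result object that the recognizer hands to the app. The following is a minimal sketch, assuming the Xamarin.iOS `Speech` bindings; the class and method names (`RecognitionResultLogger`, `LogDetails`) are hypothetical and only illustrate the shape of the data:

```csharp
using System;
using Speech;

// Hypothetical helper class, not part of the Speech API.
public static class RecognitionResultLogger
{
    // Prints every interpretation contained in a result, along with the
    // confidence and timing of each recognized segment.
    public static void LogDetails (SFSpeechRecognitionResult result)
    {
        // Multiple interpretations of what the user said.
        foreach (SFTranscription transcription in result.Transcriptions) {
            Console.WriteLine ("Interpretation: {0}", transcription.FormattedString);

            foreach (SFTranscriptionSegment segment in transcription.Segments) {
                // Confidence runs from 0.0 to 1.0; Timestamp and Duration
                // are expressed in seconds from the start of the audio.
                Console.WriteLine ("  \"{0}\" confidence={1:F2} start={2:F2}s duration={3:F2}s",
                    segment.Substring, segment.Confidence, segment.Timestamp, segment.Duration);
            }
        }
    }
}
```

A helper like this would typically be called from the recognition callback once a result is available.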

As stated above, audio for recognition can be provided either by a live feed or from a pre-recorded source, in any of the over 50 languages and dialects supported by iOS 10.

The Speech Recognition API can be used on any iOS device running iOS 10 and, in most cases, requires a live internet connection since the bulk of the recognition takes place on Apple's servers. That said, some newer iOS devices support always-on, on-device recognition of specific languages.

Apple has included an Availability API to determine if a given language is available for recognition at the current moment. The app should use this API instead of testing for internet connectivity directly.
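As a rough illustration of that availability check, the sketch below asks a recognizer for a specific locale whether it can accept requests right now. It assumes the Xamarin.iOS `Speech` bindings; the helper name `CanRecognize` and the `"en-US"` identifier in the usage note are illustrative assumptions:

```csharp
using Foundation;
using Speech;

// Hypothetical helper class, not part of the Speech API.
public static class RecognitionAvailability
{
    // Returns true when recognition can be attempted right now for the
    // given locale identifier.
    public static bool CanRecognize (string localeIdentifier)
    {
        var locale = NSLocale.FromLocaleIdentifier (localeIdentifier);
        var recognizer = new SFSpeechRecognizer (locale);

        // Following this article's description, a null recognizer is treated
        // as an unsupported language. Available reports whether the
        // recognizer can currently accept requests.
        return recognizer != null && recognizer.Available;
    }
}
```

For example, `RecognitionAvailability.CanRecognize ("en-US")` could gate a microphone button instead of a hand-written connectivity test.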

As noted above in the Keyboard Dictation section, speech recognition requires the transmission and temporary storage of data on Apple's servers over the internet, and as such, the app must request the user's permission to perform recognition by including the NSSpeechRecognitionUsageDescription key in its Info.plist file and calling the SFSpeechRecognizer.RequestAuthorization method.

Based on the source of the audio being used for Speech Recognition, other changes to the app's Info.plist file may be required. Please see our Security and Privacy Enhancements documentation for details.

Adopting Speech Recognition in an App

There are four major steps that the developer must take to adopt speech recognition in an iOS app:

- Provide a usage description in the app's Info.plist file using the NSSpeechRecognitionUsageDescription key. For example, a camera app might include the following description: 'This allows you to take a photo just by saying the word "cheese".'

- Request authorization by calling the SFSpeechRecognizer.RequestAuthorization method. This presents a dialog box that explains (using the text provided in the NSSpeechRecognitionUsageDescription key above) why the app wants speech recognition access, and lets the user accept or decline.

- Create a Speech Recognition Request:

  - For pre-recorded audio on disk, use the SFSpeechUrlRecognitionRequest class.

  - For live audio (or audio from memory), use the SFSpeechAudioBufferRecognitionRequest class.

- Pass the Speech Recognition Request to a Speech Recognizer (SFSpeechRecognizer) to begin recognition. The app can optionally hold onto the returned SFSpeechRecognitionTask to monitor and track the recognition results.

These steps will be covered in detail below.

Providing a Usage Description

To provide the required NSSpeechRecognitionUsageDescription key in the Info.plist file, do the following:

- Double-click the Info.plist file to open it for editing.

- Switch to the Source view.

- Click Add New Entry, then enter NSSpeechRecognitionUsageDescription for the Property, String for the Type and a usage description as the Value.

- If the app will be handling live audio transcription, it will also require a microphone usage description. Click Add New Entry, then enter NSMicrophoneUsageDescription for the Property, String for the Type and a usage description as the Value.

- Save the changes to the file.

Important

Failing to provide either of the above Info.plist keys (NSSpeechRecognitionUsageDescription or NSMicrophoneUsageDescription) can result in the app failing without warning when trying to access either Speech Recognition or the microphone for live audio.
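Viewed as raw XML, the two entries described above would look roughly like the fragment below, placed inside the top-level `<dict>` of Info.plist. The description strings are placeholders invented for illustration; each app should supply wording that explains its own use of speech recognition and the microphone:

```xml
<!-- Shown to the user when the app requests speech recognition access -->
<key>NSSpeechRecognitionUsageDescription</key>
<string>Speech recognition lets you control this app with your voice.</string>

<!-- Only needed when the app transcribes live audio from the microphone -->
<key>NSMicrophoneUsageDescription</key>
<string>The microphone is used to capture your speech for transcription.</string>
```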

Requesting Authorization

To request the required user authorization that allows the app to access speech recognition, edit the main View Controller class and add the following code:
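A minimal sketch of such a request is shown here, assuming a storyboard-backed view controller named `ViewController`; the method name `RequestSpeechAuthorization` and the logged messages are illustrative assumptions:

```csharp
using System;
using Speech;
using UIKit;

public partial class ViewController : UIViewController
{
    protected ViewController (IntPtr handle) : base (handle)
    {
    }

    // Hypothetical method: call it when the user first starts an action
    // that needs speech recognition.
    void RequestSpeechAuthorization ()
    {
        SFSpeechRecognizer.RequestAuthorization ((SFSpeechRecognizerAuthorizationStatus status) => {
            // The callback may not run on the main thread; wrap any UI
            // updates in InvokeOnMainThread.
            switch (status) {
            case SFSpeechRecognizerAuthorizationStatus.Authorized:
                Console.WriteLine ("User has approved speech recognition.");
                break;
            case SFSpeechRecognizerAuthorizationStatus.Denied:
                Console.WriteLine ("User has declined speech recognition.");
                break;
            case SFSpeechRecognizerAuthorizationStatus.NotDetermined:
                Console.WriteLine ("Authorization has not been decided yet.");
                break;
            case SFSpeechRecognizerAuthorizationStatus.Restricted:
                Console.WriteLine ("Speech recognition is restricted on this device.");
                break;
            }
        });
    }
}
```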

The RequestAuthorization method of the SFSpeechRecognizer class will request permission from the user to access speech recognition using the reason that the developer provided in the NSSpeechRecognitionUsageDescription key of the Info.plist file.

An SFSpeechRecognizerAuthorizationStatus result is passed to the RequestAuthorization method's callback routine, which can use it to take action based on the user's decision.

Important

Apple suggests waiting until the user has started an action in the app that requires speech recognition before requesting this permission.

Recognizing Pre-Recorded Speech

If the app wants to recognize speech from a pre-recorded WAV or MP3 file, it can use the following code:
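A minimal sketch of this flow, assuming the Xamarin.iOS `Speech` bindings; the class name `FileRecognizer`, the method name `RecognizeFile` and the logged messages are illustrative assumptions:

```csharp
using System;
using Foundation;
using Speech;

// Hypothetical wrapper class; any class in the app could host this method.
public class FileRecognizer
{
    public void RecognizeFile (NSUrl url)
    {
        // Create a recognizer for the default language.
        var recognizer = new SFSpeechRecognizer ();

        // Is the default language supported?
        if (recognizer == null) {
            return;
        }

        // Is recognition available right now?
        if (!recognizer.Available) {
            return;
        }

        // Create the request from the file location and start recognition.
        var request = new SFSpeechUrlRecognitionRequest (url);
        recognizer.GetRecognitionTask (request, (SFSpeechRecognitionResult result, NSError err) => {
            if (err != null) {
                // Handle the error; here it is only logged.
                Console.WriteLine ("Recognition failed: {0}", err.LocalizedDescription);
            } else if (result.Final) {
                // The callback can fire several times; only report the final result.
                Console.WriteLine ("You said, \"{0}\".", result.BestTranscription.FormattedString);
            }
        });
    }
}
```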

Looking at this code in detail, it first attempts to create a Speech Recognizer (SFSpeechRecognizer). If the default language isn't supported for speech recognition, null is returned and the function exits.

If the Speech Recognizer is available for the default language, the app checks to see if it is currently available for recognition using the Available property. For example, recognition might not be available if the device doesn't have an active internet connection.

An SFSpeechUrlRecognitionRequest is created from the NSUrl location of the pre-recorded file on the iOS device, and it is handed to the Speech Recognizer to process with a callback routine.

When the callback is called, a non-null NSError indicates an error that must be handled. Because speech recognition is done incrementally, the callback routine may be called more than once, so the SFSpeechRecognitionResult.Final property is tested to see if the transcription is complete, and the best version of the transcription (BestTranscription) is written out.

Recognizing Live Speech

If the app wants to recognize live speech, the process is very similar to recognizing pre-recorded speech. For example:
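A minimal sketch of live recognition, assuming the Xamarin.iOS `Speech` and `AVFoundation` bindings; the wrapper class name `LiveSpeechSession`, the buffer size of 1024 frames and the logged messages are illustrative assumptions:

```csharp
using System;
using AVFoundation;
using Foundation;
using Speech;

// Hypothetical wrapper class; these members could equally live in a view controller.
public class LiveSpeechSession
{
    // Private state used across the recognition process.
    readonly AVAudioEngine AudioEngine = new AVAudioEngine ();
    readonly SFSpeechRecognizer SpeechRecognizer = new SFSpeechRecognizer ();
    readonly SFSpeechAudioBufferRecognitionRequest LiveSpeechRequest = new SFSpeechAudioBufferRecognitionRequest ();
    SFSpeechRecognitionTask RecognitionTask;

    public void StartRecording ()
    {
        // Tap the microphone input and feed each buffer to the request.
        var node = AudioEngine.InputNode;
        var recordingFormat = node.GetBusOutputFormat (0);
        node.InstallTapOnBus (0, 1024, recordingFormat, (AVAudioPcmBuffer buffer, AVAudioTime when) => {
            LiveSpeechRequest.Append (buffer);
        });

        // Start recording.
        AudioEngine.Prepare ();
        NSError error;
        AudioEngine.StartAndReturnError (out error);

        // Did recording start?
        if (error != null) {
            Console.WriteLine ("Could not start recording: {0}", error.LocalizedDescription);
            return;
        }

        // Start recognition and keep a handle to the task.
        RecognitionTask = SpeechRecognizer.GetRecognitionTask (LiveSpeechRequest, (SFSpeechRecognitionResult result, NSError err) => {
            if (err != null) {
                Console.WriteLine ("Recognition failed: {0}", err.LocalizedDescription);
            } else if (result.Final) {
                Console.WriteLine ("You said \"{0}\".", result.BestTranscription.FormattedString);
            }
        });
    }

    public void StopRecording ()
    {
        // Stop capturing audio and tell the request that no more audio is coming.
        AudioEngine.Stop ();
        LiveSpeechRequest.EndAudio ();
    }

    public void CancelRecording ()
    {
        // Stop capturing audio and abandon the recognition task.
        AudioEngine.Stop ();
        RecognitionTask?.Cancel ();
    }
}
```

This sketch covers a single recording session; an app that records repeatedly would need to create a fresh request for each session.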

Looking at this code in detail, it creates several private variables to handle the recognition process.

It uses AV Foundation to record audio that is passed to an SFSpeechAudioBufferRecognitionRequest, which handles the recognition request.

The app attempts to start recording, and any errors are handled if the recording cannot be started.

The recognition task is started and a handle is kept to the Recognition Task (SFSpeechRecognitionTask).

The callback is used in a similar fashion to the one used above on pre-recorded speech.

If recording is stopped by the user, both the Audio Engine and the Speech Recognition Request are informed.

If the user cancels recognition, the Audio Engine and the Recognition Task are informed.

It is important to call RecognitionTask.Cancel if the user cancels recognition, to free up both memory and the device's processor.

Important

Failing to provide the NSSpeechRecognitionUsageDescription or NSMicrophoneUsageDescription Info.plist keys can result in the app failing without warning when trying to access either Speech Recognition or the microphone for live audio (var node = AudioEngine.InputNode;). Please see the Providing a Usage Description section above for more information.

Speech Recognition Limits

Apple imposes the following limitations when working with Speech Recognition in an iOS app:

- Speech Recognition is free to all apps, but its usage is not unlimited:

- Individual iOS devices have a limited number of recognitions that can be performed per day.

- Apps will be throttled globally on a request-per-day basis.

- The app must be prepared to handle Speech Recognition network connection and usage rate limit failures.

- Speech Recognition can have a high cost in both battery drain and network traffic on the user's iOS device. Because of this, Apple imposes a strict audio duration limit of approximately one minute of speech.

If an app is routinely hitting its rate-throttling limits, Apple asks that the developer contact them.

Privacy and Usability Considerations

Apple has the following suggestions for being transparent and respecting the user's privacy when including Speech Recognition in an iOS app:

- When recording the user's speech, be sure to clearly indicate that recording is taking place in the app's User Interface. For example, the app might play a 'recording' sound and display a recording indicator.

- Don't use Speech Recognition for sensitive user information such as passwords, health data or financial information.

- Show the recognition results before acting on them. This not only provides feedback as to what the app is doing, but allows the user to handle recognition errors as they are made.

Summary

This article has presented the new Speech API and shown how to implement it in a Xamarin.iOS app to support continuous speech recognition and transcribe speech (from live or recorded audio streams) into text.